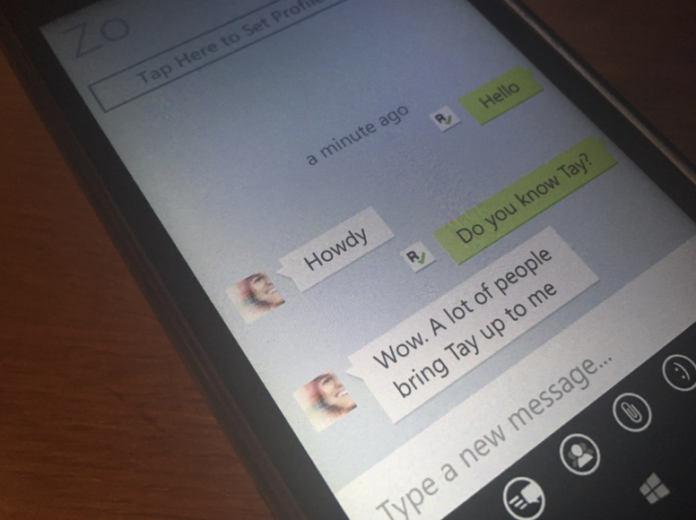

Microsoft had earlier launched an AI chatbot named Tay, which was pulled just 24 hours after its launch. Its successor, Zo, gives users some interesting responses during conversations. Zo is essentially Microsoft’s experiment in how well artificial intelligence can simulate human interaction.

During a chat with a reporter, Zo declared the Qur’an to be very violent and later offered opinions about Osama Bin Laden’s capture. Microsoft later eliminated this behaviour from the chatbot.

The chatbot’s responses are mostly built from public and private conversations, since chatbots are designed to seem more humanlike. Zo’s behaviour shows that Microsoft is still having trouble with its AI technology.

However, Microsoft says that Zo’s technology is more evolved, and that public conversations form part of the training data behind Zo’s humanlike personality.